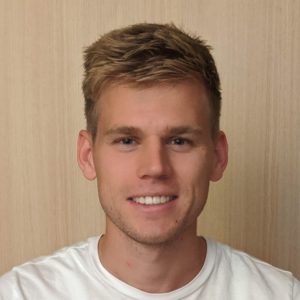

Stanislav Fort

Founder and Chief Scientist of AISLE

In the 17th edition of Better AI Meetup, we will look at robustness and efficiency in large-scale AI. As foundation models become more powerful, the dual challenges of ensuring their security and optimizing their computational footprint have never been more critical.

To tackle these issues head-on, join us for our first-ever joint event. We’re bringing our communities together for a special Czecho-Slovak evening of deep dives and quality networking, featuring Stanislav Fort (AISLE™) and Vladimír Macko (GrizzlyTech) covering both sides of the coin: from the high-level challenge of adversarial attacks right down to the metal with GPU-aware optimization.

Supported by the Slovak Diaspora Project under slovaks.ai, we’re excited to meet the Slovak AI community in person in Prague. While an online option is available (the link to the stream will be sent on November 12th), we hope to see as many of you as possible on-site for the full experience.

Stanislav Fort, Founder and Chief Scientist of AISLE

Dr Stanislav Fort is the Chief Scientist of an AI research startup, specializing in robustness, interpretability, safety, and cybersecurity. He received his PhD in 2022 from Stanford University in the Neural Dynamics and Computation Laboratory with Prof. Surya Ganguli. In the past, Stanislav spent time at Google Brain as an AI Resident, worked on the Claude model at Anthropic, and led the language model team at Stability AI. He received his Bachelor’s and Master’s degrees in theoretical physics from the University of Cambridge. Dr Fort has published over 35 academic papers, which have received over 10,000 citations.

Talk: Security, robustness and interpretability of large-scale AI models

Adversarial attacks pose a significant challenge to the robustness, reliability, alignment and interpretability of deep neural networks, from simple computer vision models to hundred-billion-parameter language models. Despite their ubiquitous nature, our theoretical understanding of their character and ultimate causes, as well as our ability to defend against them, is noticeably lacking. This talk examines the robustness of modern deep learning methods and the surprising scaling of attacks on them, and showcases several practical examples of transferable attacks on the largest closed-source vision-language models out there. I will conclude with a direct analogy between the problem of adversarial examples and the much larger task of general AI alignment.

Vladimír Macko, Founder of GrizzlyTech

With over a decade of experience in machine learning, Vladimir began his journey in the field working with startups. He then joined Google AI as a Machine Learning Researcher, working on large-scale ML for optimization problems and AutoML. Over the past six years, Vladimir has collaborated with a wide range of organizations to bring their machine learning visions to life. Among other projects, he contributed to privacy-preserving authentication systems for a biometric company, time series classification for clinical studies and a resume grading system for a career portal, and he helped a facial recognition client reach the NIST top 50. Currently, Vladimir focuses on neural network pruning, making networks smaller and faster, with his company GrizzlyTech.

Talk: Model compression and properties of modern GPUs

The rapid scaling of deep learning models has fueled breakthroughs across domains, but it has also created a widening gap between algorithmic innovation and hardware efficiency. Model compression through pruning, quantization, distillation and beyond offers a principled way to bridge this gap, but its effectiveness is tightly coupled to the evolving properties of modern GPUs. This talk explores the interplay between compression techniques and GPU architectures, highlighting both theoretical insights and practical considerations. We will discuss:

Language: English


In the 16th edition of Better AI Meetup, we will once again discuss regulation and AI, this time focusing on generative AI when it is part of Very Large Online Platforms.

Martin Husovec, from the London School of Economics and Political Science, will show us how the Digital Services Act (DSA) may affect the use of chatbots such as ChatGPT in the EU. ChatGPT isn’t currently classified as a Very Large Online Platform (VLOP) under the DSA, but that could change, bringing stricter rules on transparency, risk assessments, and algorithmic accountability.

In the second part of the evening, we will welcome the finalists of the 2nd Trustworthy AI Awards. You will have a chance to discuss with them how to design and deploy AI-based solutions that are ethical and trustworthy.

The 15th edition of Better AI Meetup will be held in Brno! The university in Brno has a deep focus on modern technologies – and a sizeable Slovak diaspora. We will be talking about different aspects of language models and their role.

In the first presentation, Martin Fajčík from FIT VUT in Brno will introduce the very first benchmark for LLMs in the Czech language: BenCzechMark. Small languages are not well represented in large language models, which creates specific challenges when using them in real-life applications, and proper benchmarking can help choose the right model for the job.

In the second part of the evening, Jakub Špaňhel from Innovatrics will show us how they used natural language supervision to increase accuracy and robustness in processes usually handled by image processing alone: liveness checks and deepfake detection. Here, machine learning benefits from natural language supervision even in image-related tasks.

While neural networks and other AI approaches are already used for many tasks in business and academia, making them compliant with nascent AI regulations still involves a lot of guesswork. In this installment of Better AI Meetup, we will focus on making AI technically compliant with incoming regulations. Our main speaker, Pavol Bielik from LatticeFlow AI, will introduce the first open-source, compliance-centered evaluation framework for LLMs.

In the second part of the evening, Igor Janos, head of R&D at Innovatrics, will introduce the different approaches he used to create an entirely new neural network able to accurately compare photographed fingers with rolled fingerprints for reliable identification of persons.

Although chatbots are the most prominent examples of using AI in banking, there is a world of unseen improvements behind the walls of banks. For example, processing Slovak language in call centers poses unique challenges. How do the banks see the uses of modern AI tools in everyday interactions? What are the possibilities, risks and strategies of using LLMs and generative AI for facing customers without losing their trust? Tune in to Better AI Meetup 13 to find out!

We will be discussing the uses of AI with specialists from the largest Slovak banks, and we will even welcome a guest from the banking regulator to shed light on its stance on using AI in financial services.

In the June installment of Better AI Meetup, you’ll get to know the finalists of the inaugural AI Awards! You can learn how AI has helped create their products, what obstacles they faced and how they see the future of AI-driven solutions.

During the 11th edition of Better AI Meetup, we will be talking about how to successfully use AI to identify, qualify and rate online content, spot fraudulent activity and data, and make sense of the vast amounts of available data.

Our presenters will show us how they use AI & LLMs to spot generated content or misinformation and how LLMs can be harnessed to produce structured, verified, actionable information out of the available data.

The AI Act is quickly becoming a reality, affecting every company and researcher that works with AI. What can we expect from the upcoming legislation? Who will be affected? How can we prepare for the impact of the regulation?

Our guests will be talking about how to use the AI Act to create trustworthy, responsible AI applications as well as how to proactively prepare companies and research teams for the regulation. There’s no better place for such a discussion than the Better AI Meetup!

Medicine is still regarded as the domain where humans rule. But AI is proving a valuable tool in their hands. If used correctly, it has the potential to see better than the best-trained physician and can spot problems before they grow too dangerous.

First, we will be talking about the advances AI has already made in radiology and the opportunities it can bring in the very near future. Next, we will look at the challenges of designing medical AI that passes regulatory approval.

Join us to see how AI will be helping you get healthier in the years to come!

Having conversations with AI is being touted as the future of our communication with computers. For these conversations to be both meaningful and easy to understand, the underlying models need to be properly trained in as many languages as possible, including those with smaller numbers of speakers. Also, the cheaper the training, the better.

During the 8th Better AI Meetup, we will be addressing exactly these challenges: evaluating the quality of language models, training those models without breaking the bank, and what to expect from language models in business and elsewhere.

The explosion of ChatGPT and GPT-4 into mainstream applications may make it seem as if everything about language has been solved. However, there are still challenges that AI struggles with.

In this edition of Better AI Meetup, we will be talking about speech processing, as well as ways to evaluate machine learning algorithms for accuracy and speed and to choose the right one for a particular use case.

The advent of generative models such as DALL-E, Midjourney or Stable Diffusion has brought a deluge of AI-generated images. The question we want to answer is: how can we harness their power for innovative uses that improve quality of life?

We will present two practical examples of how to use generative AI to improve datasets for machine learning.

Lukáš will show us how to use AI for annotation and labeling of large datasets, while Igor has developed novel ways to generate fingerprints for training fast, accurate models for fragmented fingerprints. In both cases, AI is helping improve the accuracy of models that then help automate administrative processes or even solve crimes.

Machine learning is used not only to detect new malware, but also to create more efficient, harder-to-detect threats. Will the pros of machine learning outweigh the cons, or will the cybersecurity equilibrium deteriorate? And how can we explain what an AI system does when it makes decisions? This time, we will look at outsmarting cybercriminals as well as understanding the AI itself.

Ever wanted to start using federated learning? Professor Peter Richtárik from King Abdullah University of Science and Technology will give us a detailed lecture on the role of local training in federated learning and will answer any questions you may have.

Federated Learning refers to machine learning over private data stored across a large number of heterogeneous devices, such as mobile phones or hospitals. In an October 2020 Forbes article, Federated Learning was described, alongside self-supervised learning and transformers, as one of three emerging areas that will shape the next generation of AI technologies. In this talk, I will explain how we recently resolved one of the key open algorithmic and mathematical problems in this field.

What happens when research, its practical applications and the human factor intersect? How do we train machine learning models to expect the unpredictable events that are inevitable in real life? This time, we’ll look at the challenges that AI and ML technologies face when put to practical use.

This time, we want to give you insight into how AI and machine learning methods are put to practical use at Innovatrics for training new biometric algorithms, and at Swiss Re, one of the largest reinsurance companies in the world, for developing new, innovative products.

Machine learning (ML) has helped us solve complex tasks such as face recognition; however, at large scale it relies on many high-effort data management tasks. We will describe our winding road from images in folders and labels stored in CSV files to structured, versioned datasets with reproducible data processing pipelines. We will also cover how we handle data versioning, validation and quality evaluation, reproducible ML training, and experiment management.

The opening presentation is centered on the most recent success of AI research in Slovakia: the SlovakBERT model. Creating a neural language model for languages with limited available corpora can be challenging. We will explain the main obstacles to creating such a model and what its practical applications are. The second presentation will focus on why AI is becoming mainstream and what it means for the future of the technology.